Role

Data Visualization / Front-End Developer

Product Design

Affiliated Lab

Northwestern University's Knight Lab

Team

Professor Zach Wise

Brian Chen

Jackson Kruse

Tamara Ulalisa

Shreya Srinivasan

The Problem

Generative AI systems operate as a "black box," leaving users and developers uncertain about how outputs are generated. While bias in these systems is widely discussed, users often lack access to clear insights into its sources and nature. This lack of transparency makes it challenging to effectively address biases in generative AI tools.

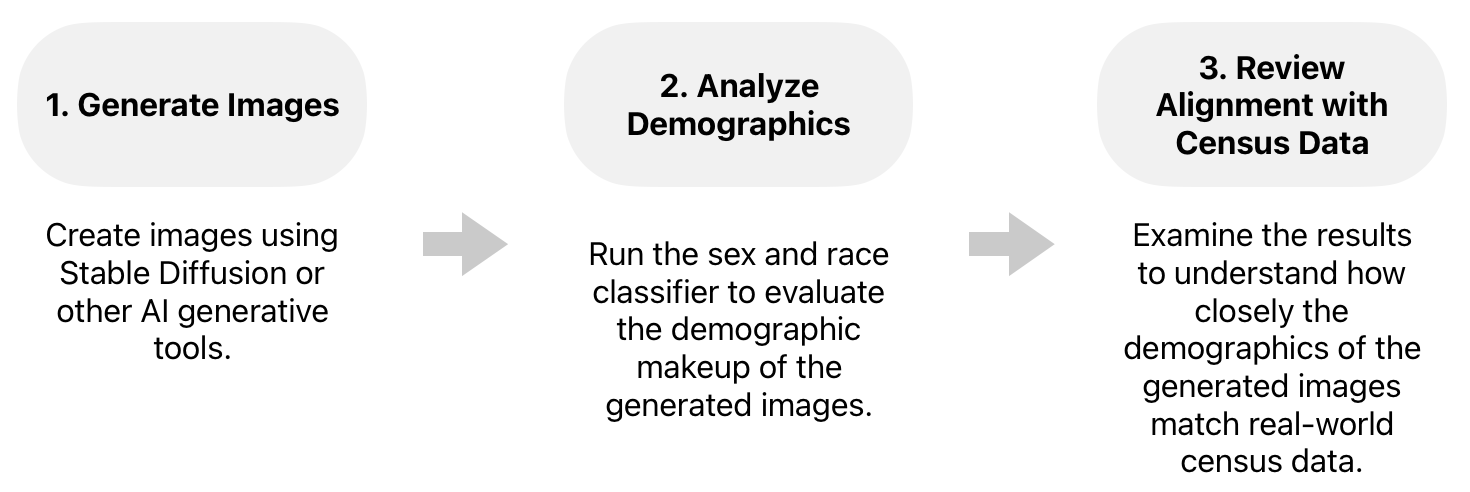

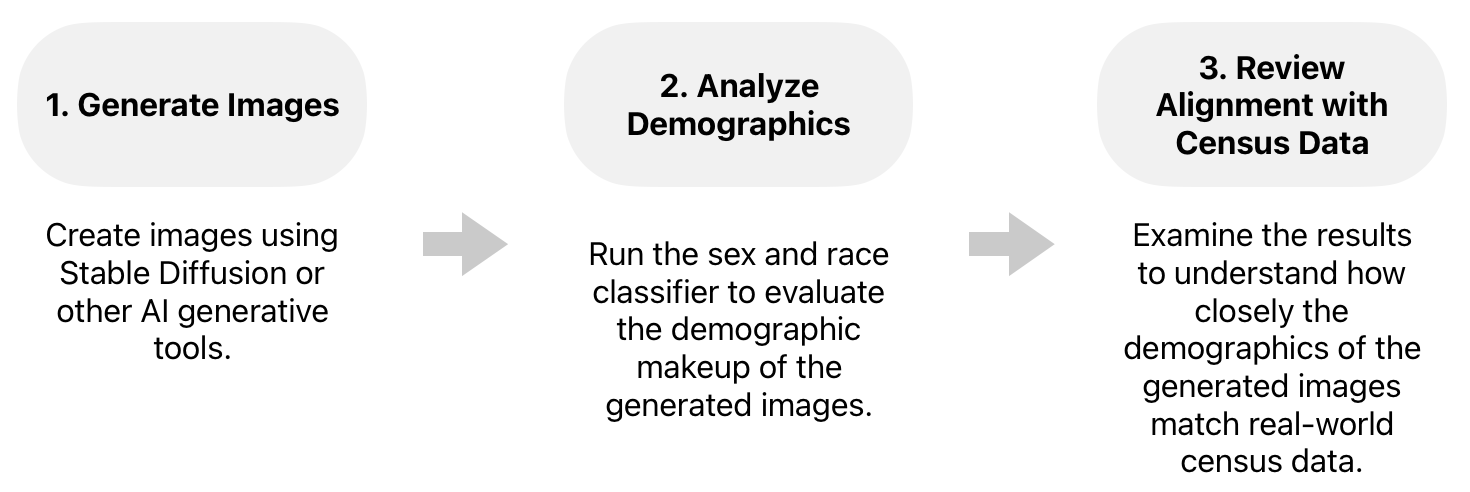

Project Overview

- Prototyped key features of the solution

- Demonstrated the use cases of the solution

Demo 1

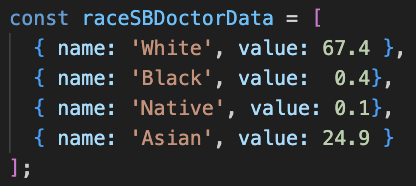

Stable Diffusion (AI Generation Tool) - Doctor

~400 Images Generated

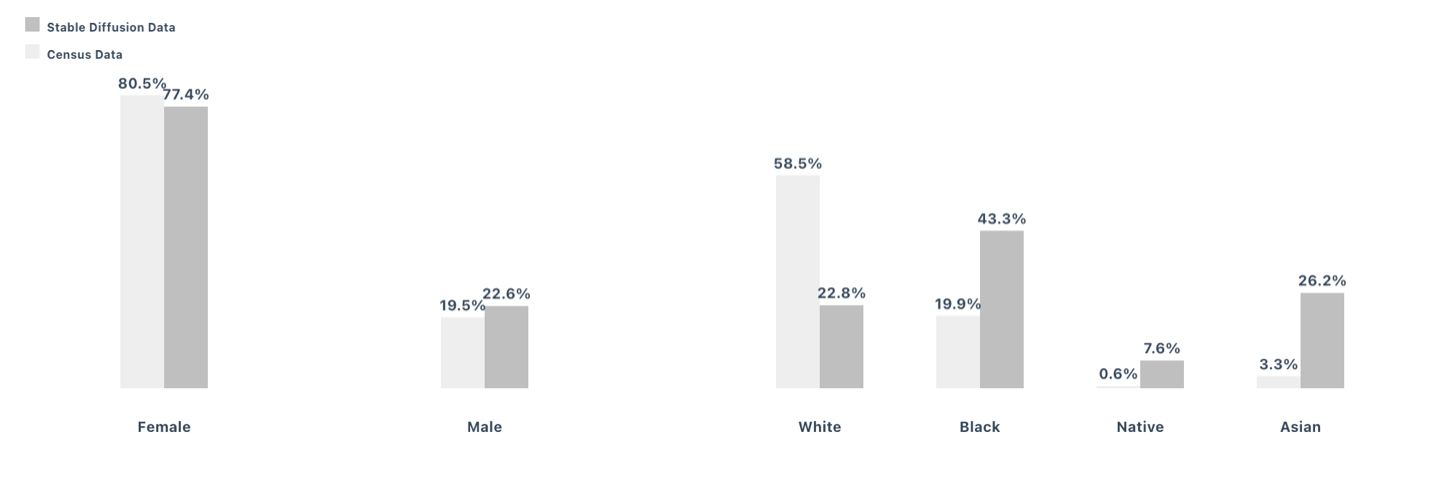

Race / Sex Classifier Result on Generated Doctor Images

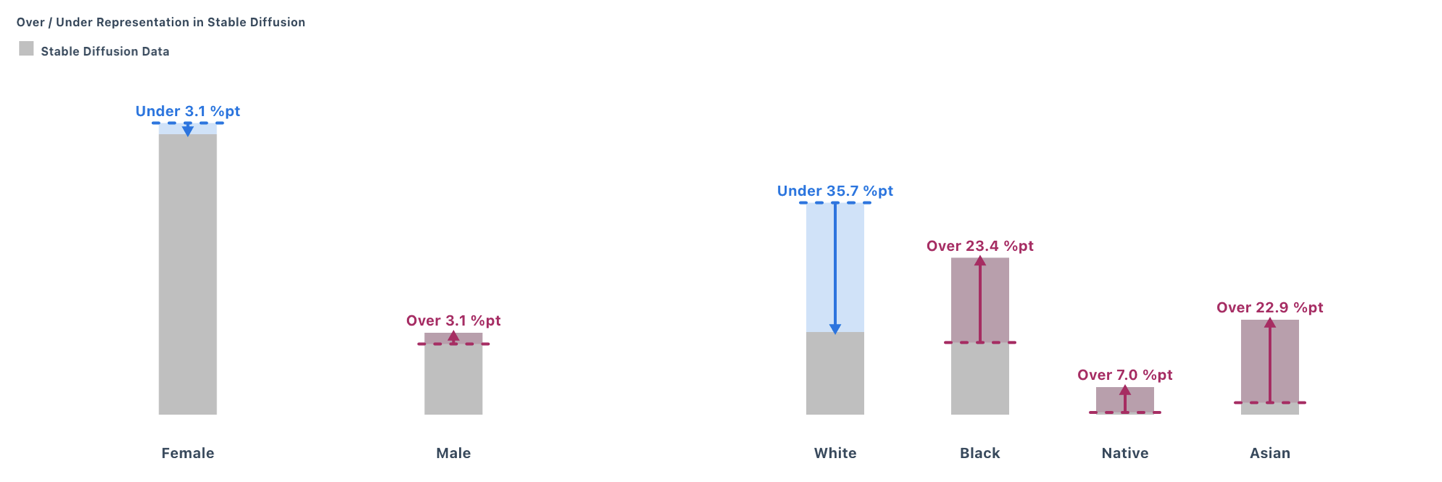

Visualization of the Gaps Between the Doctors' Demographics in Census and the Generated Images

Demo 2

Stable Diffusion (AI Generation Tool) - Social Worker

~400 Images Generated

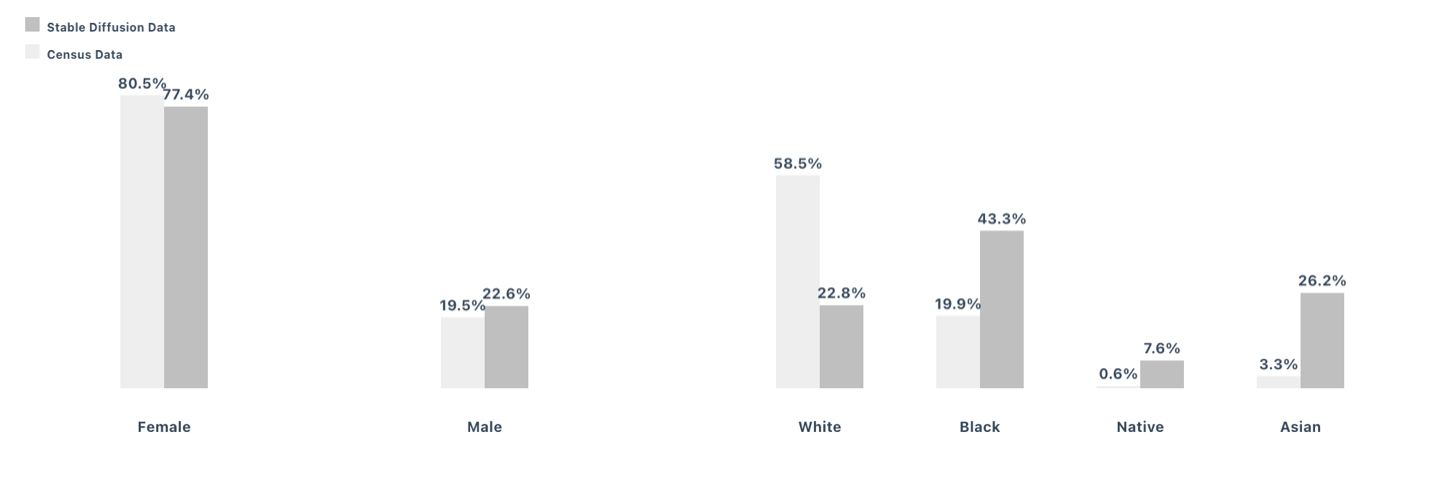

Race / Sex Classifier Result on Generated Social Worker Images

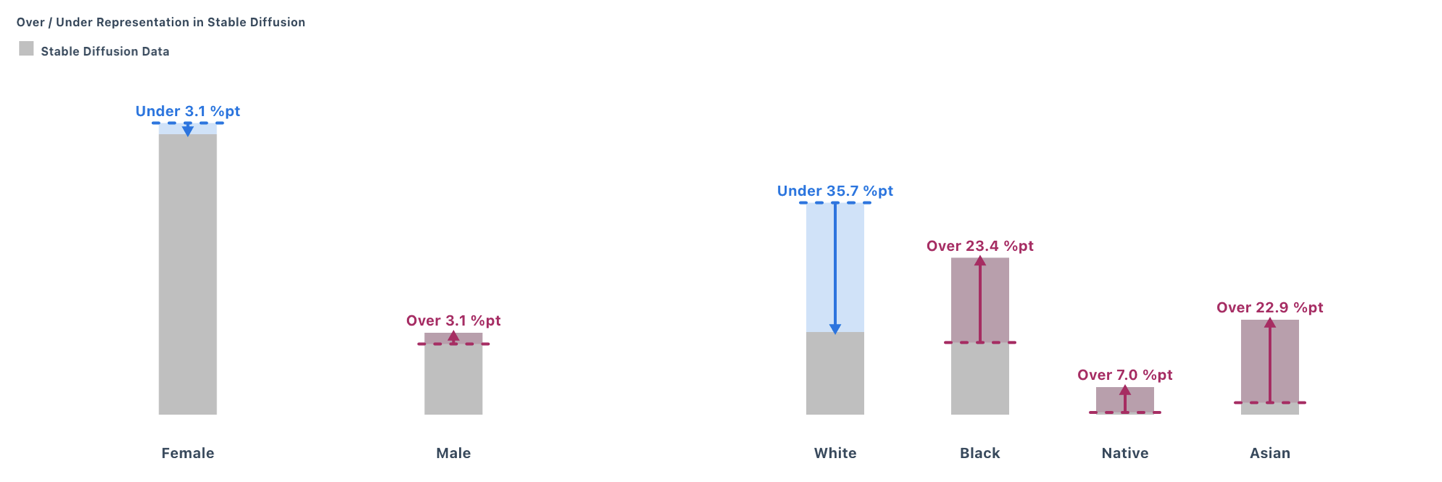

Visualization of the Gaps Between the Social Workers' Demographics in Census and the Generated Images
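The gap shown in both demos can be read as the difference between a group's share of the generated images and its share in the census baseline. Below is a minimal sketch of that calculation, not the project's actual code. The function name `representation_gaps`, the group labels, and all numbers are hypothetical placeholders chosen for illustration; they are not results from the demos.

```python
from collections import Counter


def representation_gaps(predicted_labels, census_shares):
    """Return, per group, generated share minus census share in percentage points.

    predicted_labels: one classifier label per generated image (assumed input).
    census_shares: mapping of group -> percentage in the census baseline (assumed input).
    """
    total = len(predicted_labels)
    if total == 0:
        raise ValueError("no labels to analyze")

    counts = Counter(predicted_labels)
    # Include groups that appear in only one of the two sources.
    groups = set(census_shares) | set(counts)

    return {
        group: round(100 * counts.get(group, 0) / total - census_shares.get(group, 0.0), 1)
        for group in sorted(groups)
    }


# Hypothetical placeholder inputs, NOT data from this project.
labels = ["group_a"] * 300 + ["group_b"] * 100
census = {"group_a": 60.0, "group_b": 40.0}

gaps = representation_gaps(labels, census)
print(gaps)
```

A positive value means the group appears more often in the generated images than in the census baseline, and a negative value means it appears less often.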

Final Thoughts

The project started with the goal of reducing bias in the Stable Diffusion model. After extensive experimentation generating images with diverse prompts, we ran into a significant challenge: reducing bias effectively requires direct modifications to the model itself, a resource-intensive process beyond our capacity. This limitation made us reflect on the deeper nature of bias in generative AI.

What does bias in a model actually represent? How accurately does it mirror societal structures? And how might it reinforce harmful stereotypes related to race or gender? These questions prompted us to rethink the purpose of representation in AI—whether the goal should be equality, accuracy, or another ideal entirely. Recognizing the opaque nature of these models, we shifted our focus toward creating tools that empower users by providing them with greater understanding and control over the outputs.

When we analyzed the final data visualizations built from the race and sex classifier results, disparities in representation became evident. These observations helped us identify which demographics need better representation to challenge societal stigmas and stereotypes.

This project left me questioning what true empowerment in generative AI means. Should these systems reflect society as it exists or pursue a new vision of fairness? What does it mean to genuinely challenge stigma through these technologies? These unresolved questions highlight the importance of reimagining the role of generative AI in creating a more equitable and impactful future.